AI Slop

What is the function of AI?

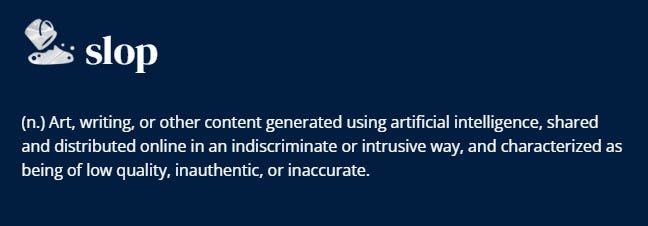

A few weeks ago, the Oxford Dictionary announced the shortlist for the 2024 Word of the Year. Among the contenders was slop, defined as:

The term was in the running for Word of the Year, as Oxford explains, following a 332% increase in its use to describe material produced by LLMs—content that is “often viewed as being low-quality or inaccurate.”

Since ChatGPT's public launch, we've been figuring out what it's useful for, when to use it, and when not to. The answer has evolved over time. Schools have adopted new rules and companies have established their own codes of conduct.

But it’s still a moving target. We’ve built consensus and imposed restrictions that, in most cases, remain flexible depending on the capabilities of the current model. No one trusts ChatGPT’s math skills, but it is useful for writing email marketing spam.

This tech is probably most widely accepted in image and video generation. There, the use of AI has sometimes even become a badge of pride, as seen in various commercials boasting the label “100% AI-generated.” Coca-Cola is one of the latest examples.

For the company (and AI bros), the sole value of this ad lies in its origin. It’s a badge of pride.

But, in most other cases, the label “AI-generated” is a reason for disdain.

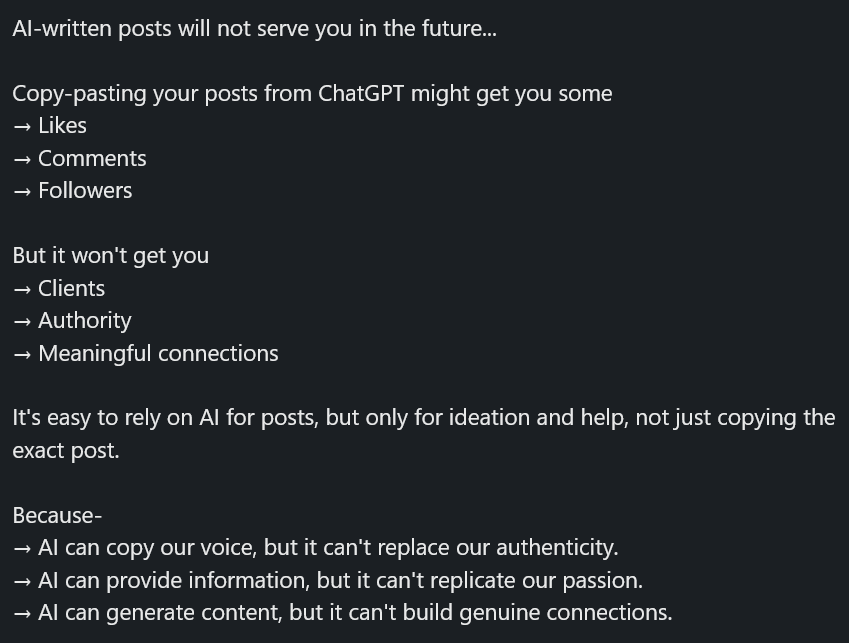

On LinkedIn, I’ve seen plenty of examples of the latter.

People “writing” with AI about AI content. AI content about AI content, and so on. People angry and frustrated at an apparent lack of genuine intention or effort.

What this comes down to is people feeling cheated. They feel deceived because they expect to be talking with someone, to find behind a post a person with an intention and an idea.

But behind the slop, there’s no one.

In this case, the “AI-generated” label is a mark of deception: we are trying to pass off a text written by AI as something we made, something that came out of our own labor, time, and attention.

AI slop is deceptive. It triggers feelings similar to those that plagiarism does.

I’ve seen websites declaring the use of AI in the creation of images or texts. But in these cases, are we willing to actively seek out this type of content? Mostly no.

Recent studies have shown how AI is capable of generating results that pass as human-made. But the point of these studies is solely to demonstrate the capability of AI. People do not actively seek out this content (especially texts).

When we use AI, we’re able to deceive and not get caught. We praise AI when it manages to pull this off. OpenAI, for example, claims to have developed software capable of detecting AI-generated content with 99% certainty. However, they refuse to release this product, arguing it would harm those who use these tools to write in a language they don’t fully master.

But isn’t that, in itself, a declaration by OpenAI that the purpose of these tools is, in fact, to deceive others?

So, what is the function of AI?

When do we have the “license” to give an answer without the need for thought? When do we have the license to give an answer detached from our intentions?